Predicting Engagement of ChatGPT-related Tweets: A Machine Learning Approach

Fine-tuning a DistilBERT transformer on about 500,000 tweets about ChatGPT

As a follow-up to my previous articles,

How to use Machine Learning to write engaging titles for Medium articles? (substack.com)

here I have prepared a similar study using a dataset of about 500,000 ChatGPT-related tweets and their engagement. The dataset is publicly available on Kaggle, and full details of the analysis can be found in this public Kaggle notebook.

Step 1 — data preprocessing

Here, data preprocessing consists of the following steps:

replacing URLs with a special <URL> token;

removing tweets exceeding 280 characters;

assigning a binary classification label based on whether the recorded sum of likes and comments of a given tweet exceeds 15 (a threshold chosen so that roughly the top 5% of tweets by engagement are labeled positive);

random oversampling of the minority class — tweets with positive engagement.

As a result, the cleaned and oversampled dataset consists of about 900,000 tweets.
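The preprocessing steps listed above can be sketched roughly as follows. This is a minimal illustration rather than the notebook's actual code: the column names (`text`, `likes`, `comments`) and the `preprocess` helper are hypothetical, and the oversampling is done with plain pandas resampling, which may differ from the tool used in the notebook.

```python
import re

import pandas as pd

URL_PATTERN = re.compile(r"https?://\S+")


def preprocess(df, text_col="text", likes_col="likes", comments_col="comments",
               threshold=15, max_len=280, seed=42):
    """Clean tweets, build a binary engagement label, and balance the classes.

    Column names and defaults are illustrative assumptions.
    """
    out = df.copy()
    # 1. Replace URLs with a special <URL> token
    out[text_col] = out[text_col].str.replace(URL_PATTERN, "<URL>", regex=True)
    # 2. Remove tweets exceeding the character limit
    out = out[out[text_col].str.len() <= max_len]
    # 3. Label = 1 if likes + comments exceed the threshold, else 0
    out["label"] = ((out[likes_col] + out[comments_col]) > threshold).astype(int)
    # 4. Randomly oversample the minority class until both classes match in size
    counts = out["label"].value_counts()
    minority = counts.idxmin()
    n_extra = int(counts.max() - counts.min())
    extra = out[out["label"] == minority].sample(
        n=n_extra, replace=True, random_state=seed
    )
    return pd.concat([out, extra], ignore_index=True)


# Tiny made-up example
raw = pd.DataFrame({
    "text": ["see https://t.co/abc now", "x" * 300, "a", "b", "c"],
    "likes": [20, 0, 1, 2, 3],
    "comments": [5, 0, 0, 0, 0],
})
clean = preprocess(raw)
```

With a positive class of about 5%, duplicating positive tweets until the two classes match nearly doubles the dataset, which is in line with the jump from about 500,000 to about 900,000 tweets.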

Step 2 — loading and training the model

For training, I use the distilbert-base-cased model, which contains about 66 million trainable parameters.
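As a rough sanity check on the parameter count, the sketch below builds the DistilBERT architecture with a two-class classification head from a config, using randomly initialized weights so that nothing is downloaded. The vocabulary size of 28,996 (the cased vocabulary) and the otherwise default config values are my assumptions about distilbert-base-cased; the notebook itself loads the pre-trained weights.

```python
from transformers import DistilBertConfig, DistilBertForSequenceClassification

# Assumed settings: cased vocabulary size, library defaults for everything else
# (6 layers, 12 heads, hidden size 768), and a 2-class classification head.
config = DistilBertConfig(vocab_size=28996, num_labels=2)

# Randomly initialized weights: enough to count parameters, not to make predictions
sketch_model = DistilBertForSequenceClassification(config)

n_trainable = sum(p.numel() for p in sketch_model.parameters() if p.requires_grad)
print(f"Trainable parameters: {n_trainable / 1e6:.1f}M")
```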

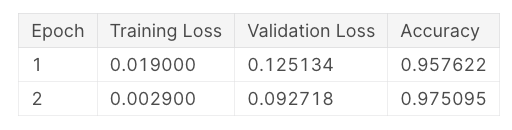

After training the full sample during 2 epochs (the process takes about 4 hours of NVIDIA TESLA P100 GPU available for Kaggle users), the weighted accuracy has increased from 50% (obtained from random oversampling) to about 97.5%:
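To make the 50% starting point concrete, here is a small sketch of a class-weighted (balanced) accuracy, computed as the mean of per-class recall. Treating "weighted accuracy" as balanced accuracy is my assumption, and the notebook may compute the metric differently. On a balanced two-class set, a model that always predicts the same class scores exactly 50%.

```python
from collections import defaultdict


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall, so each class counts equally."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        correct[t] += int(t == p)
    return sum(correct[c] / total[c] for c in total) / len(total)


# Balanced toy labels, as after random oversampling
y_true = [0] * 50 + [1] * 50
always_negative = [0] * 100

baseline = balanced_accuracy(y_true, always_negative)
print(baseline)
```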

Step 3 — save the model to Hugging Face

After checking the model performance on a few selected examples, the model is saved to a Hugging Face repository.

Example Python code showing how to use the model:

```python
import pandas as pd
import torch
from transformers import AutoTokenizer, AutoModelForSequenceClassification

pd.set_option('display.width', 1000)
pd.set_option('display.max_colwidth', 100)

repo_id = "dima806/chatgpt-tweets-engagement"
tokenizer = AutoTokenizer.from_pretrained(repo_id)
model = AutoModelForSequenceClassification.from_pretrained(repo_id)

tweets = [
    "Unlock the power of language with ChatGPT! 🚀✨",
    "Need a creative spark? ChatGPT is here to ignite your imagination! 🔥",
    "Discover the limitless possibilities of conversational AI with ChatGPT.",
    "Get ready to have your mind blown by ChatGPT's incredible language capabilities!",
    "Want to have a chat with an AI? ChatGPT is your go-to companion!",
    "Experience the future of natural language processing with ChatGPT.",
    "ChatGPT is your personal AI assistant, ready to help and entertain!",
    "Say hello to ChatGPT, your friendly neighborhood language model. 👋",
    "Need some writing inspiration? ChatGPT has got you covered!",
    "Say goodbye to writer's block and let ChatGPT unleash your creativity.",
    "ChatGPT: Making AI conversations feel more human, one interaction at a time.",
    "Join the conversation with ChatGPT and see where it takes you!",
    "Interact with ChatGPT and embark on a journey of knowledge and discovery.",
    "Ask ChatGPT anything and prepare to be amazed by its insightful responses!",
    "Discover the power of ChatGPT and its ability to understand and generate text.",
    "ChatGPT is the bridge between humans and artificial intelligence. 🌉",
    "Experience the next level of virtual communication with ChatGPT.",
    "Engage in thought-provoking discussions with ChatGPT and broaden your horizons.",
    "Let ChatGPT be your language companion, always there to lend a helping hand.",
    "Experience the magic of ChatGPT as it brings words to life!",
    "Have a conversation that feels like it's with a human, thanks to ChatGPT's natural language understanding."
]


def get_sorted_results(tweets):
    # Tokenize the input texts
    encoded_inputs = tokenizer(tweets, padding=True, return_tensors="pt")

    # Perform inference on the tokenized inputs
    with torch.no_grad():
        logits = model(**encoded_inputs).logits

    # Get the predicted class probabilities, shape (num_tweets, num_classes).
    # No squeeze() here, so that a single-tweet list keeps its batch dimension.
    probs = torch.softmax(logits, dim=1)

    # Probability of the last class (the "engaged" class)
    last_class_probs = probs[:, -1].tolist()

    # Create a DataFrame to store the results
    results_df = pd.DataFrame({
        "Title": tweets,
        "Engaged probability": last_class_probs,
    })

    # Sort the resulting DataFrame based on predicted probability
    return results_df.sort_values("Engaged probability", ascending=False)
```

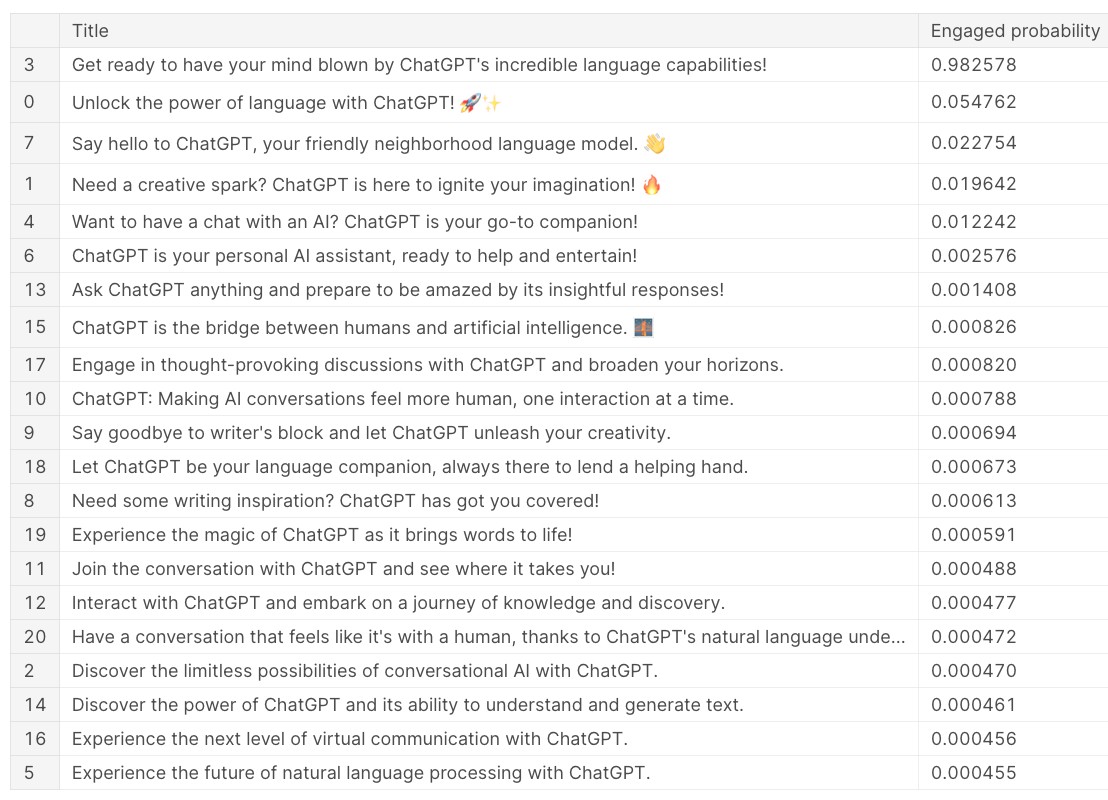

get_sorted_results(tweets)which results in the following output (sorted by predicted engagement scores):

I hope you find these results useful. If you have questions or comments, feel free to write them below or reach me directly through LinkedIn or Twitter.